Diffusion Forcing: MIT's New Method for Smarter AI Models

MIT's Diffusion Forcing enhances AI by merging diffusion and next-token models, boosting robotics and video generation capabilities.

Researchers at MIT’s CSAIL have developed "Diffusion Forcing," a training technique for sequence models that aims to improve AI capabilities in areas like video generation, robotics, and planning by merging features of diffusion and next-token prediction methods.

AT A GLANCE

MIT's CSAIL introduces "Diffusion Forcing," a technique merging diffusion and next-token methods to enhance AI models.

The approach shows promise in robotics, enabling better task execution even amid visual distractions.

Diffusion Forcing outperforms traditional methods in video generation and planning, such as navigating 2D mazes.

The technique could help robots adapt to new environments by predicting actions and filtering out irrelevant data.

The Limitations of Existing Sequence Models

In AI, sequence models have gained popularity for their ability to generate data step by step. Next-token models, such as those behind ChatGPT, generate sequences one element at a time and can produce outputs of varying length. However, they struggle with tasks that require planning over long horizons. Full-sequence diffusion models, like those used to generate videos, excel at future-conditioned sampling, in which generation is steered toward a desired later outcome, but they cannot easily produce sequences of varying lengths.

What is Diffusion Forcing?

Diffusion Forcing combines the strengths of both model types in a single, flexible training scheme. Its name comes from "Teacher Forcing," the conventional scheme in which a model learns to predict the next token from the ground-truth tokens that precede it. Diffusion Forcing instead adds a different amount of noise to each token in a sequence, as diffusion models do, and trains the model to remove that noise while also predicting the next few tokens. The model therefore learns to clean up corrupted data and to anticipate upcoming steps at the same time, which allows more adaptive sequence generation and more reliable decision-making across different tasks.
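The noising step can be illustrated with a small sketch. It assumes each token gets its own independently sampled noise level and uses a simple linear schedule; the function names, the schedule, and the scalar "tokens" are all hypothetical choices made for illustration, not the authors' implementation.

```python
import math
import random


def sample_levels(length, num_levels, rng):
    """Draw one noise level per token (0 = clean, num_levels = pure noise)."""
    return [rng.randint(0, num_levels) for _ in range(length)]


def corrupt(tokens, levels, num_levels, rng):
    """Mix each token with Gaussian noise according to its own level.

    Assumption: a linear schedule, where the fraction of signal kept
    falls from 1.0 at level 0 to 0.0 at level num_levels.
    """
    noisy = []
    for x, k in zip(tokens, levels):
        keep = 1.0 - k / num_levels
        eps = rng.gauss(0.0, 1.0)
        noisy.append(math.sqrt(keep) * x + math.sqrt(1.0 - keep) * eps)
    return noisy


rng = random.Random(0)
tokens = [0.5, -1.0, 2.0, 0.0]              # toy scalar "tokens"
levels = sample_levels(len(tokens), 10, rng)
noisy = corrupt(tokens, levels, 10, rng)
# A model would be trained to recover `tokens` from `noisy` and `levels`.
```

In this picture, giving every token level 0 corresponds to the clean history used in Teacher Forcing, while giving every token the same level corresponds to full-sequence diffusion; mixing levels within one sequence sits between the two.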

Enhancing Robotics and Video Generation

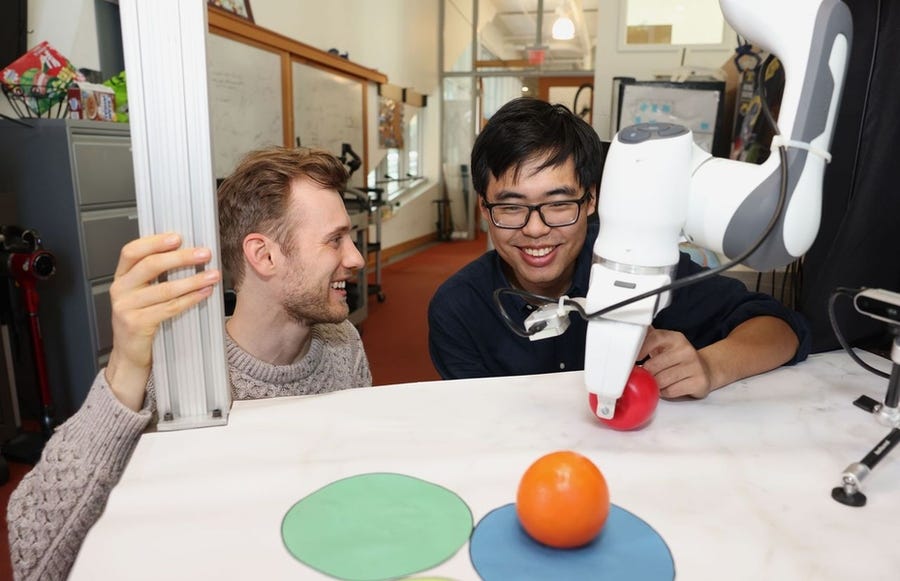

The researchers tested Diffusion Forcing on a robotic arm, which completed its tasks despite visual distractions. The robot was trained through virtual reality teleoperation, learning to mimic a user's movements, and it placed objects in their target spots even when starting from random positions. The method also outperformed comparable models at video generation, producing consistent, high-resolution frames in environments like Minecraft and digital simulations where those models struggled to maintain quality.

Applying Diffusion Forcing in Decision-Making

The new approach supports planning over both short and long horizons, and it accounts for the fact that the distant future is more uncertain than the near future. For instance, Diffusion Forcing outperformed six other methods in navigating a 2D maze by generating more effective plans to reach a goal. This flexibility could benefit future AI-driven applications, including robots that adapt to dynamic environments.
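One way to picture uncertainty that grows with distance is a noise schedule that keeps far-future tokens noisier than near-future ones while a plan is being refined. The sketch below is a hypothetical illustration of that idea; `plan_noise_levels` and its staircase schedule are assumptions, not the schedule used by the researchers.

```python
def plan_noise_levels(horizon, step, num_levels):
    """Noise level for each future token at a given refinement step.

    Assumption: every token starts at full noise, and token t begins
    denoising t steps after token 0, so nearer tokens are always at
    least as clean as farther ones.
    """
    return [
        min(num_levels, max(0, num_levels + t - step))
        for t in range(horizon)
    ]


# Levels for a 4-token plan with 3 noise levels, over successive steps.
for step in range(7):
    print(step, plan_noise_levels(4, step, 3))
```

Under this assumed schedule, the next action is fully denoised well before the end of the plan is, so an agent could act on the near term while the far term remains tentative.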

Training Robots to Overcome Challenges

Diffusion Forcing could help robots generalize across tasks and environments by filtering out irrelevant data and predicting the most likely next actions. A household robot, for example, might keep organizing objects even when it encounters unexpected visual obstacles.

Toward a "World Model" for Robots

According to lead researcher Boyuan Chen, the team's ultimate goal is to create a "world model" AI capable of simulating real-world scenarios. By training on extensive datasets, such as internet videos, robots could be equipped to perform unfamiliar tasks by understanding what needs to be done based on their surroundings.

Scaling Up and Future Research

The researchers aim to scale up Diffusion Forcing with larger datasets and the latest transformer architectures. The approach could evolve into a comprehensive AI framework that integrates video generation with motion planning.

Looking Forward to NeurIPS

The MIT team, supported by organizations such as the U.S. National Science Foundation and Amazon Science Hub, will present their findings at the NeurIPS conference in December. They continue to explore ways to bridge the gap between robotics and AI-driven decision-making, laying the groundwork for the next generation of autonomous systems.

The research was led by Boyuan Chen and Vincent Sitzmann, in collaboration with MIT and CSAIL affiliates, and it highlights the potential of blending different AI techniques to improve performance across multiple domains.